Top 10 Tips for Choosing the Best Test Gauges for Accurate Measurements

Accurate measurements are crucial in industrial applications, where even minor discrepancies can have significant consequences. Test gauges help ensure precise, reliable measurements and are essential tools for engineers, technicians, and quality assurance professionals. According to a recent report by the Measurement and Control Systems Association (MCSA), approximately 60% of industrial measurement errors can be traced back to the improper use or selection of gauges, which is why choosing the right test gauge deserves careful thought.

When selecting test gauges, consider calibration accuracy, environmental compatibility, and the demands of the specific application. Research indicates that the right choice of test gauge can reduce measurement errors by up to 30%, improving both operational efficiency and product quality. Adhering to industry standards and guidelines, such as those published by the International Organization for Standardization (ISO), is also essential for reliable, consistent measurement processes. As industries evolve, knowing how to select the proper test gauge will only become more important for maintaining a competitive advantage.

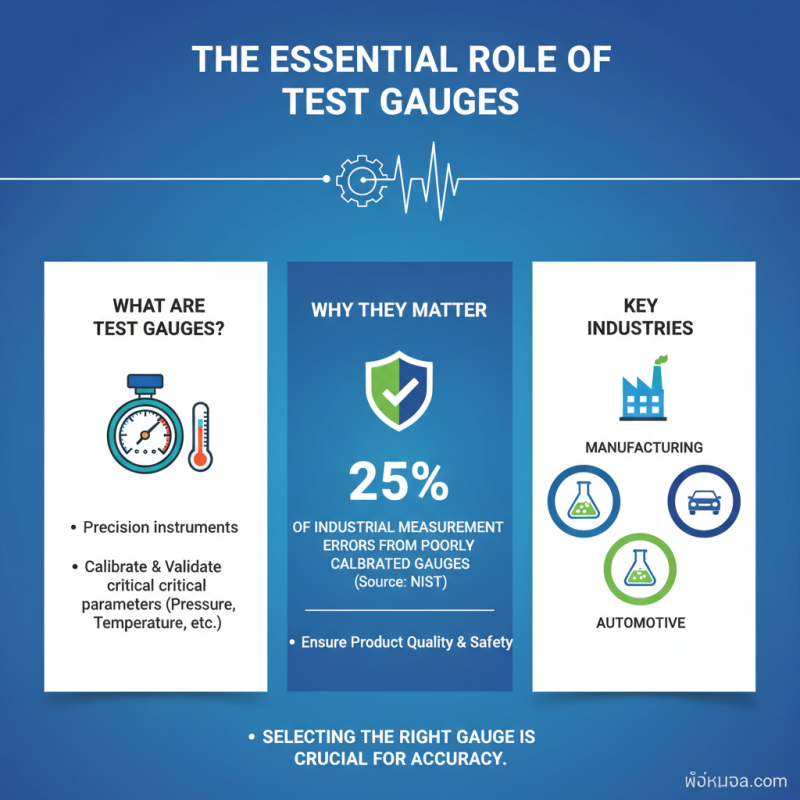

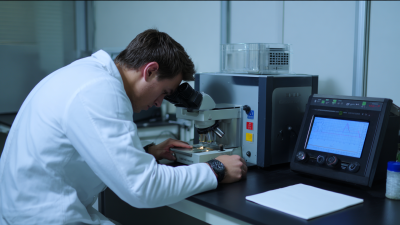

Understanding the Role of Test Gauges in Measurement Accuracy

Test gauges play a crucial role in ensuring measurement accuracy across industries such as manufacturing, pharmaceuticals, and automotive. They are used to verify and calibrate measurements of pressure, temperature, and other critical parameters that affect product quality and safety. According to the National Institute of Standards and Technology (NIST), approximately 25% of measurement errors in industrial processes can be traced back to inadequate or poorly calibrated test gauges. This underscores the need to select the right test gauge for each application in order to maintain high standards of accuracy.

When evaluating test gauges, consider the gauge's range, accuracy class, and operating environment. For instance, the American Society for Testing and Materials (ASTM) recommends gauges with an accuracy of ±1% of full scale or better for most industrial applications. In environments with extreme temperatures or varying humidity, materials and construction also become determining factors for long-term reliability and consistent readings. Following these guidelines improves measurement precision and helps with compliance with industry regulations, supporting operational efficiency and reducing the risk of costly errors.
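To make "±1% of full scale" concrete: the tolerance is a fixed band derived from the gauge's full-scale value, so it becomes a larger share of the reading as the reading drops. The sketch below assumes a hypothetical 0 to 100 psi gauge; the function names are illustrative and do not come from any standard or library.

```python
def allowable_error(full_scale: float, accuracy_pct_fs: float) -> float:
    """Absolute tolerance (±) for a gauge specified as ±accuracy_pct_fs % of full scale."""
    return full_scale * accuracy_pct_fs / 100.0


def error_as_pct_of_reading(reading: float, full_scale: float, accuracy_pct_fs: float) -> float:
    """Worst-case error at a given reading, expressed as a percentage of that reading."""
    if reading <= 0:
        raise ValueError("reading must be positive")
    return allowable_error(full_scale, accuracy_pct_fs) / reading * 100.0


if __name__ == "__main__":
    fs = 100.0  # hypothetical 0 to 100 psi gauge with ±1% FS accuracy
    for reading in (100.0, 50.0, 10.0):
        print(f"{reading:5.1f} psi: ±{allowable_error(fs, 1.0):.2f} psi "
              f"(±{error_as_pct_of_reading(reading, fs, 1.0):.1f}% of reading)")
```

Running it shows the same ±1.00 psi band amounting to 1% of a 100 psi reading but 10% of a 10 psi reading, which is why the gauge's range should be matched to the values you actually expect to measure.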

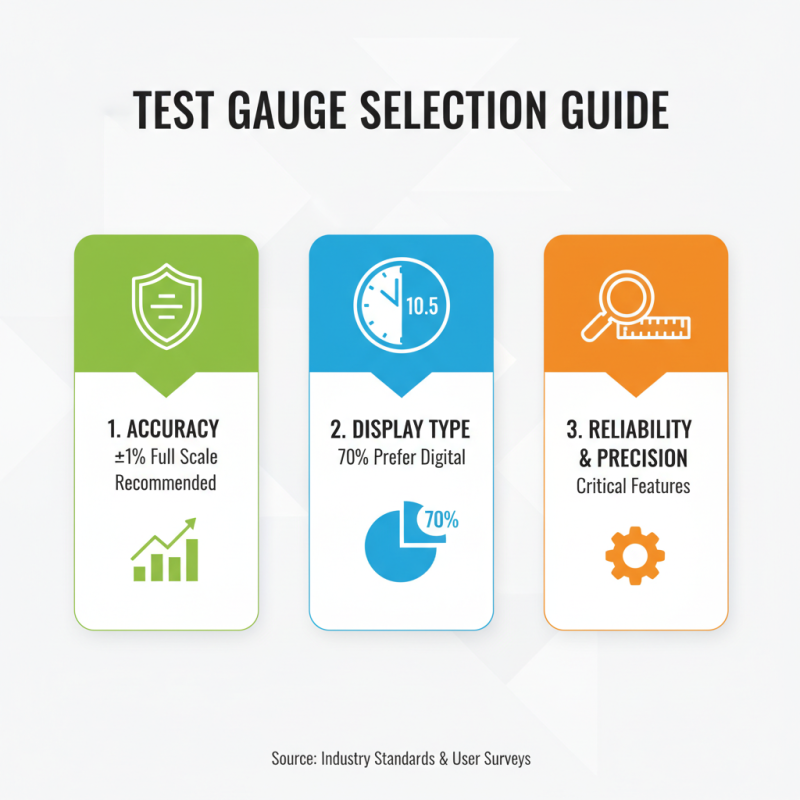

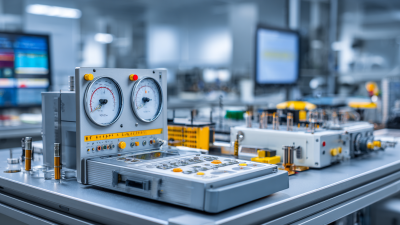

Key Features to Consider When Selecting Test Gauges

When selecting test gauges for accurate measurements, several key features deserve careful consideration to ensure reliability and precision. Accuracy is paramount; professional standards suggest an accuracy rating of ±1% of full scale or better. The display type, analog or digital, also significantly affects usability. Recent surveys indicate that 70% of users prefer digital displays for their clarity and ease of reading, particularly in poorly lit environments.

One essential tip when choosing a test gauge is to assess the environment in which it will be used. Temperature extremes, humidity, and exposure to corrosive substances can dictate the choice of materials and design; for example, stainless steel gauges are often recommended for harsh industrial settings. Range and scale matter too: choose a gauge whose range covers your expected readings with a comfortable safety margin, so that normal operating values ideally stay at or below 75% of full scale for the most accurate readings.
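The 75% guideline translates into a simple selection rule: pick the smallest available range whose 75% point still covers your highest expected operating value. A minimal sketch, assuming a hypothetical list of catalogue ranges in psi (substitute your supplier's actual ranges); `pick_range` is an illustrative name, not a library function.

```python
from typing import Optional, Sequence

# Hypothetical catalogue of full-scale ranges (psi); replace with your supplier's list.
STANDARD_RANGES_PSI = (15, 30, 60, 100, 160, 200, 300, 600, 1000, 1500, 3000, 5000)


def pick_range(max_operating: float,
               ranges: Sequence[float] = STANDARD_RANGES_PSI,
               max_fraction: float = 0.75) -> Optional[float]:
    """Return the smallest full-scale range with max_operating <= max_fraction * range.

    Returns None if no range in the list is large enough.
    """
    for full_scale in sorted(ranges):
        if max_operating <= max_fraction * full_scale:
            return full_scale
    return None


if __name__ == "__main__":
    for pressure in (45, 70, 80, 10000):
        print(pressure, "psi ->", pick_range(pressure))
```

Choosing the smallest qualifying range, rather than simply a large one, keeps readings in the upper part of the scale, where a full-scale accuracy band is the smallest fraction of the reading.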

Finally, calibration cannot be overlooked. Regular recalibration maintains accuracy and compliance with industry standards. Reports from measurement industry bodies recommend that gauges needing regular recalibration have easily accessible adjustment mechanisms, which make routine checks more practical. Choosing the right test gauge means balancing these features against your specific requirements to achieve the highest possible measurement precision.
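Regular recalibration is easier to enforce when due dates are tracked explicitly. The sketch below assumes a fixed 365-day interval, which is only a placeholder (the appropriate interval depends on your quality procedure, usage, and the manufacturer's guidance), and uses hypothetical gauge IDs.

```python
from datetime import date, timedelta

# Placeholder interval; set it from your own calibration procedure.
DEFAULT_INTERVAL_DAYS = 365


def next_due(last_calibrated: date, interval_days: int = DEFAULT_INTERVAL_DAYS) -> date:
    """Date on which the next calibration falls due."""
    return last_calibrated + timedelta(days=interval_days)


def is_overdue(last_calibrated: date, today: date,
               interval_days: int = DEFAULT_INTERVAL_DAYS) -> bool:
    """True once the due date has passed (a gauge on its due date is not yet overdue)."""
    return today > next_due(last_calibrated, interval_days)


if __name__ == "__main__":
    gauges = {  # hypothetical gauge IDs and last calibration dates
        "PG-101": date(2023, 1, 15),
        "PG-102": date(2023, 9, 1),
    }
    today = date(2024, 2, 1)
    for gauge_id, last in gauges.items():
        status = "OVERDUE" if is_overdue(last, today) else "ok"
        print(f"{gauge_id}: due {next_due(last)} -> {status}")
```

A day-count interval is used here for simplicity; note that 365 days does not always land on the same calendar date across a leap year.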

Types of Test Gauges and Their Specific Applications

When selecting the best test gauges for accurate measurements, it is crucial to understand the different types of test gauges and their specific applications. Test gauges are essential tools in many industries, and their effectiveness can vary significantly depending on the measurement requirements. Common types include analog and digital gauges, each with its own advantages. Analog gauges are often favored for their simplicity and their reliability in conditions where electronic components may fail, while digital gauges offer high precision and easy reading, making them suitable for applications that require detailed data analysis.

According to the "2022 Precision Measurement Report" published by the Technical Standards Agency, the demand for digital test gauges has surged by over 30% in the past two years, reflecting the industry's shift toward more sophisticated measurement technologies. Among the various applications, pressure gauges are vital in the oil and gas sector for monitoring pipeline integrity and operational safety. Similarly, temperature gauges are critical in pharmaceutical production processes, where maintaining specific thermal conditions is essential for product quality. Understanding the nuances of each gauge type and its appropriate application can greatly enhance measurement accuracy and operational efficiency across diverse sectors.

Evaluating Calibration Standards for Reliable Readings

When selecting test gauges for accurate measurements, the calibration standards employed are of utmost importance. Calibration ensures that the measurements taken are consistent and reliable, with industry standards often specified by organizations like the International Organization for Standardization (ISO). According to a report by the National Institute of Standards and Technology (NIST), instruments that are calibrated to recognized standards demonstrate a 30% increase in measurement accuracy. This underlines the necessity for adopting rigorous calibration protocols to ensure that gauge readings reflect the true physical quantities being measured.

In practice, the choice of calibration standards can significantly influence the performance of test gauges. The Calibration Standards for Pressure Gauges document notes that using traceable standards can reduce measurement uncertainty to below 0.2% of the full-scale reading for high-precision applications. A regular calibration schedule not only improves measurement accuracy but can also extend a gauge's useful life by detecting and correcting drift before it affects readings. Regularly comparing test gauges against primary calibration standards, and confirming that they meet the relevant industry requirements, leads to dependable readings that improve overall operational safety and efficiency.
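Comparing a gauge against a reference standard usually comes down to an as-found check: apply known values at several points across the range and confirm that every reading falls within the gauge's tolerance. A minimal sketch, assuming the tolerance is ±% of full scale at every point and that the reference standard's own uncertainty is small enough to ignore; `CalPoint` and `as_found_check` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class CalPoint:
    reference: float  # value applied by the reference standard
    reading: float    # value indicated by the gauge under test


def as_found_check(points: Sequence[CalPoint], full_scale: float,
                   accuracy_pct_fs: float) -> Tuple[bool, float]:
    """Return (passed, worst_error) for a set of test points.

    Tolerance is ±accuracy_pct_fs % of full scale at every point; the
    reference standard's uncertainty is assumed to be negligible.
    """
    if not points:
        raise ValueError("at least one test point is required")
    tolerance = full_scale * accuracy_pct_fs / 100.0
    worst_error = max(abs(p.reading - p.reference) for p in points)
    return worst_error <= tolerance, worst_error


if __name__ == "__main__":
    # Hypothetical five-point check of a 0 to 100 psi, ±0.25% FS test gauge.
    points = [CalPoint(0.0, 0.0), CalPoint(25.0, 25.1), CalPoint(50.0, 50.2),
              CalPoint(75.0, 74.9), CalPoint(100.0, 100.1)]
    passed, worst = as_found_check(points, full_scale=100.0, accuracy_pct_fs=0.25)
    print(f"worst error {worst:.2f} psi -> {'PASS' if passed else 'FAIL'}")
```

With these made-up readings the worst deviation is 0.20 psi, inside the ±0.25 psi band; the same readings would fail a ±0.1% FS specification.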

Budget Considerations: Balancing Cost and Quality in Test Gauges

When selecting test gauges, finding the right balance between cost and quality is paramount. Industry reports, such as those by the National Institute of Standards and Technology (NIST), highlight that the precision of measurements can be significantly impacted by the choice of instruments used. For example, using a lower-quality gauge may result in measurement inaccuracies of up to 5%, which can affect both the reliability of test results and the integrity of projects. Therefore, while sticking to budget constraints is vital, investing in high-quality gauges can yield long-term benefits.

To make informed choices, keep a few essential tips in mind. First, determine the primary purpose of your test gauge: many gauges are designed for specific applications, and choosing one that suits your needs improves measurement accuracy. Additionally, look for gauges that have been calibrated by accredited bodies, since accredited calibration provides documented assurance of their precision. Finally, although lower-priced options may seem appealing, they can cost more in the long run if they need frequent replacement or recalibration.

Ultimately, it's important to weigh the initial financial outlay against the expected performance and lifespan of the test gauge. According to a recent study by the International Society of Automation, high-quality gauges, while generally more expensive upfront, show a return on investment through reduced downtime and fewer inaccuracies over their operational life. By making informed choices and considering both cost and quality, users can ensure reliable and precise measurements.
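Weighing the initial outlay against performance and lifespan can be done with a simple annualized-cost comparison. In the sketch below every figure is a hypothetical placeholder, not market data, and "other costs" stands in for whatever downtime or rework you attribute to the gauge; `GaugeOption` and `annualized_cost` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class GaugeOption:
    name: str
    purchase_price: float
    calibration_cost_per_year: float
    other_costs_per_year: float  # e.g. estimated downtime or rework attributed to the gauge
    expected_life_years: float


def annualized_cost(option: GaugeOption) -> float:
    """Spread the purchase price evenly over the service life and add recurring yearly costs."""
    if option.expected_life_years <= 0:
        raise ValueError("expected_life_years must be positive")
    recurring = option.calibration_cost_per_year + option.other_costs_per_year
    return option.purchase_price / option.expected_life_years + recurring


if __name__ == "__main__":
    # All figures are hypothetical; substitute your own quotes and estimates.
    options = [
        GaugeOption("budget", 150.0, 80.0, 200.0, 2),
        GaugeOption("premium", 640.0, 80.0, 50.0, 8),
    ]
    for opt in sorted(options, key=annualized_cost):
        print(f"{opt.name}: {annualized_cost(opt):.2f} per year")
```

This is a deliberately simple straight-line comparison that ignores discounting; with your own figures, the cheaper gauge may or may not come out ahead.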

© 2023 - Marsh Instruments